The Three Forms of Artificial Intelligence

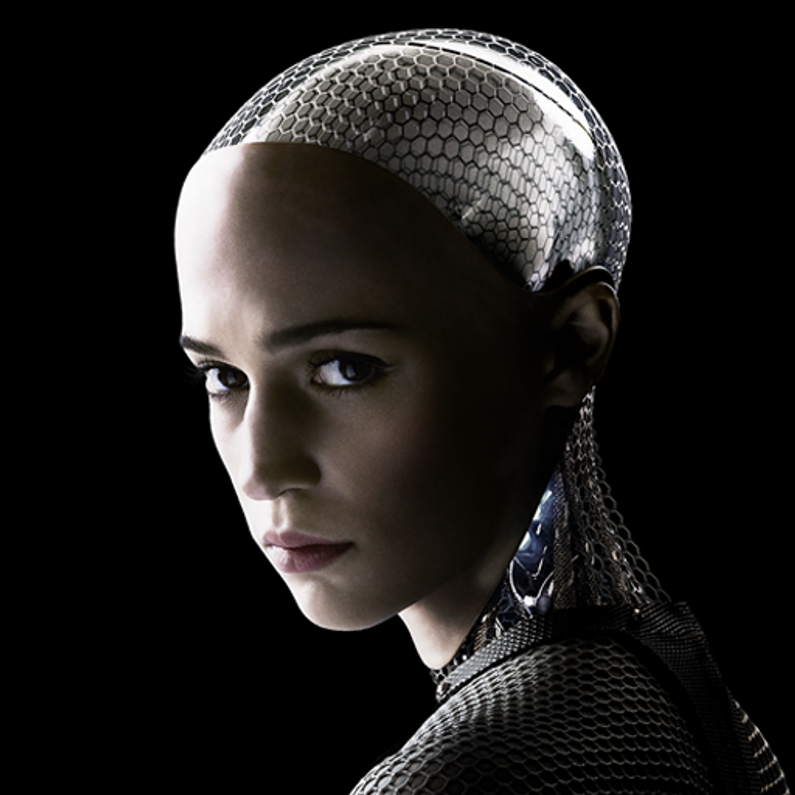

If the movie Ex Machina scares you about AI, understanding the three major types of artificial intelligence may help. Experts disagree on the implications of AI, largely because AI is such a broad term. It is in the same boat as “IoT,” a term we reviewed in a previous blog post along with everything it includes.

Artificial Narrow Intelligence (Weak AI)

Ask Siri to explain herself: her specialized intelligence algorithm falls into the Weak AI category. Weak AI can outperform humans at the specific task it was built for, but that’s about it. Siri does not develop her own aspirations because she has no self-awareness. Applications like Siri do, however, feed our ever-growing addiction to technology, which concerns some observers.

Artificial General Intelligence (Strong AI)

The Strong AI category includes machines that learn through reasoning. They can work through problems creatively and strategically to find the best possible solution. Experts expect this category to be the next revolutionary step in AI. Some organizations, like Google’s DeepMind venture and the Allen Institute for Artificial Intelligence, aim to use AI for the common good of humanity. Concerns at this level of AI include privacy, database management, hacking and military use.

Artificial Superintelligence (Superintelligence)

This is the scary AI of movies. Superintelligence refers to a machine that “radically outperforms the best human minds in every field, including scientific creativity, general wisdom and social skills,” says Nick Bostrom, a philosopher at Oxford. He adds that Superintelligence would allow a machine to “shape the future according to its preferences.” The race to develop Superintelligence comes with hefty incentives: enormous profits, plus political and military dominance.

Bostrom emphasizes that although we may be up to 300 years away from Superintelligence, researchers and developers should consider the “control problem” now. If Superintelligence means that machines could develop their own goals, those goals are potentially incompatible with those of humans, not to mention other living things.

Machines will never have the one thing that sets humans apart: values. Superintelligent machines will be guided not by self-developed values but by self-preservation. Bostrom emphasizes that this is where things could “go radically wrong.” But that’s a few hundred years away… right?

Photo Credit: "Ex Machina"
